OpenCAPI Opens New Realm Of Data Handling

IBM, Google, HPE, Dell, and others have combined resources to promote the OpenCAPI standard, which aims to boost the performance of data center servers tasked with analyzing large amounts of data.

Applications analyzing machine data, a busy website’s traffic, or big data want to drink that data from a firehose, not a straw. For that, IBM, Google, and partners have launched OpenCAPI, the open source Coherent Accelerator Processor Interface.

Intel’s x86 architecture started out as the basis for personal computers and was later adapted for use in servers. But its data handling was not designed for the demands that e-commerce, web, and data analysis systems would generate.

The OpenCAPI standard is aimed at counteracting those limitations.

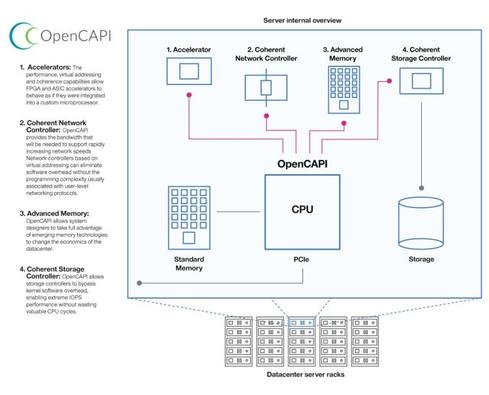

The standard allows accelerator boards to be linked to a server’s CPU and main memory and to move data between them at high I/O rates. The result is a server architecture modified enough to move data at up to 10X its previous speed, a spokesman for the newly formed OpenCAPI Consortium said during the Oct. 14 announcement.

(Image: Vladimir_Timofeev/iStockphoto)

“This data-centric approach to server design, which puts the compute power closer to the data, removes inefficiencies in traditional system architectures to help eliminate system bottlenecks,” according to the announcement.

Among the consortium founders are Dell EMC, AMD, Hewlett Packard Enterprise, IBM, and Google. Other founders include:

- Mellanox Technologies, a supplier of network accelerator processors

- Micron, a supplier of high speed memory devices

- Nvidia, a graphics processing unit maker

- Xilinx, a supplier of field programmable gate arrays

Notably absent from the consortium is Intel, the dominant supplier of x86 server processors.

By producing OpenCAPI and demonstrating an ecosystem around it, IBM and the other hardware manufacturers are potentially enlarging their markets. Server makers that wish to speed data throughput will need to turn to an OpenCAPI manufacturer for accelerator devices.

OpenCAPI has been engineered to be compatible with the PCIe bus in x86 servers and to complement it. OpenCAPI will be compatible specifically with PCI Express 4.0, the next generation of the bus, due out in early 2017.

Likewise, the first accelerator devices based on OpenCAPI and PCIe 4.0 are still pending. In fact, the first servers incorporating those devices aren’t expected until the second half of 2017.

IBM plans to offer servers based on its Power9 chip and OpenCAPI next year. Google and Rackspace announced at the OpenPower Foundation Summit in April that they will produce servers for their data centers based on the Power9 and OpenCAPI architecture. In an Oct. 14 blog post, John Zipfel, technical program manager for the Google Cloud Platform, announced Google had completed its first specification for the Zaius server.

Compared with the 16 gigabit-per-second (Gbps) per-lane speed of the PCIe 4.0 bus, OpenCAPI devices will move data at 25 Gbps per lane. But the real gain comes from OpenCAPI’s ability to integrate the accelerator or memory device directly into the server architecture.
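The two link speeds quoted above lend themselves to a quick back-of-the-envelope comparison. The sketch below uses only the two numbers from the article (16 and 25, in the same unit) and treats them as raw signaling rates. It assumes an equal number of lanes on both links and ignores encoding and protocol overhead, so the results are rough ratios rather than measured performance. The function names and the 10-second baseline are illustrative.

```python
# Link rates quoted in the article; both are in the same unit,
# so only their ratio matters here.
PCIE_RATE = 16
OPENCAPI_RATE = 25


def raw_speedup(old_rate: float, new_rate: float) -> float:
    """Ratio of raw link rates, assuming equal lane counts and no overhead."""
    return new_rate / old_rate


def scaled_transfer_time(old_seconds: float, old_rate: float, new_rate: float) -> float:
    """Time for the same transfer on the faster link if link rate were the only limit."""
    return old_seconds * old_rate / new_rate


if __name__ == "__main__":
    # Hypothetical baseline: a transfer that takes 10 seconds at the lower rate.
    print(f"raw speedup: {raw_speedup(PCIE_RATE, OPENCAPI_RATE):.4f}x")
    print(f"scaled time: {scaled_transfer_time(10, PCIE_RATE, OPENCAPI_RATE):.1f} s")
```

Under these assumptions the raw link is only about 1.56 times faster, well short of the "up to 10X" figure cited by the consortium. That gap is consistent with the article's point that most of the gain comes from how the device is integrated into the server, not from the wire speed alone.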

For example, solid state disks linked to an OpenCAPI device can be treated the same as system memory, increasing their data throughput to the processor. Without OpenCAPI, a solid state disk must be treated as an external or secondary system, and the server goes through thousands of CPU cycles to draw its data into main memory or a CPU.

(Source: OpenCAPI Consortium)

With OpenCAPI, those thousands are reduced to the few cycles needed by main memory itself.

Steve Fields, chief engineer of IBM Power Systems and member of the OpenCAPI Consortium board, wrote in a blog post:

“An OpenCAPI device operates solely in the virtual address space of the applications it supports. This allows the application to communicate directly with the device, eliminating the need for kernel software calls that can consume thousands of CPU cycles per operation.”
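The difference Fields describes can be loosely illustrated with ordinary files. The sketch below is an analogy only, not OpenCAPI code: it contrasts fetching records through explicit read calls, each of which enters the kernel, with mapping the same file into the process's virtual address space and slicing it like memory. The function names and the scratch file are hypothetical, and a memory-mapped file still relies on the kernel to handle page faults, so it only approximates the direct device access the quote describes.

```python
import mmap
import os
import tempfile


def read_via_calls(path, offsets, size):
    """Fetch each record with an explicit seek and read on an unbuffered file."""
    records = []
    with open(path, "rb", buffering=0) as f:  # unbuffered: each read goes to the OS
        for off in offsets:
            f.seek(off)
            records.append(f.read(size))
    return records


def read_via_mapping(path, offsets, size):
    """Map the file into the process's address space, then slice it like memory."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            return [view[off:off + size] for off in offsets]


if __name__ == "__main__":
    # Hypothetical demo data: a 256-byte scratch file.
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, bytes(range(256)))
        os.close(fd)
        offsets = [0, 16, 200]
        # Both approaches return the same bytes; only the access path differs.
        assert read_via_calls(path, offsets, 4) == read_via_mapping(path, offsets, 4)
        print(read_via_mapping(path, [16], 4))
    finally:
        os.remove(path)
```

In the first function every record costs at least one trip through the operating system. In the second, once the mapping exists, the application reads the data with plain memory indexing, which is the style of access the quote attributes to OpenCAPI devices.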

OpenCAPI devices and servers incorporating them are likely to find frequent use in internet of things deployments, machine learning and analytics applications, image recognition processing, and neural networks.

OpenCAPI devices will have cache coherency with the server’s main memory, keeping data in the two different pools synchronized and enabling the CPU to call data from either one without a built-in delay.

Patrick Moorhead, contributor to Forbes, wrote on Oct. 14: “Today, a bevy of tech industry giants announced a new server standard, called OpenCAPI … This is big, really big.”